Integrating Aegir with Linux and FTP

Posted by cafuego on Tuesday 8 November 2011. Due to the insane cost of bandwidth in Australia (compared to the rest of the developed world), I've recently decided to move some of our hosting clients to Linode. This means they can move more data more cheaply, and I don't need to come up with (and administer) a bandwidth accounting system for my Australian-based web VM.

We pretty much exclusively use Drupal for hosting clients, so to make management a bit easier I decided to use Ægir on the new Linode. Installation was a relative breeze, after a quick Google search to find out how to specify that I didn't want to use Apache and wanted to use a separate server as a dedicated MySQL host.

The problem (there is always a problem) arose when I needed to give a hosting client access to their Drupal installation, so they could manage themes and site-specific modules. Just adding an account and providing SSH access was out of the question, as all sites are stored under a single system user. Anyone who logs in with permission to edit their own Drupal site can then also edit every other site, and even the Ægir installation itself.

FTP would be a solution, as FTP accounts can be chrooted (locked into a specific directory) quite easily, but I didn't want to have to manage a list of FTP accounts separate from the Drupals in Ægir. That isn't the lazysysadmin way.

After a bit of thought I remembered that on an older web host I had happily used libnss-mysql and libpam-mysql, which integrate accounts defined in any MySQL database with the Linux system. The trick is to get MySQL to cough up the account information in the correct format, so the system can parse it as if these accounts were normal system users.
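To illustrate what "the correct format" means, here is a minimal sketch that uses Python's built-in `sqlite3` as a stand-in for MySQL. The system's account lookups expect the seven colon-separated fields of a passwd(5) entry (name, password placeholder, UID, GID, GECOS, home directory, shell), so the query simply selects columns in that order. The `ftp_accounts` table, its column names, the UID/GID values and the home path are all hypothetical, not the schema libnss-mysql or Ægir actually uses.

```python
import sqlite3
from typing import Optional


def make_demo_db() -> sqlite3.Connection:
    """Build an in-memory stand-in for the MySQL account table (hypothetical schema)."""
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE ftp_accounts ("
        " username TEXT PRIMARY KEY, uid INTEGER, gid INTEGER,"
        " gecos TEXT, homedir TEXT, shell TEXT)"
    )
    # Hypothetical client account whose home is one Drupal site's directory.
    conn.execute(
        "INSERT INTO ftp_accounts VALUES (?, ?, ?, ?, ?, ?)",
        ("example", 5001, 5001, "Example client",
         "/var/aegir/sites/example.com", "/bin/false"),
    )
    return conn


def passwd_entry(conn: sqlite3.Connection, username: str) -> Optional[str]:
    """Return a passwd(5)-style line for username, or None if there is no such account."""
    # Columns are selected in passwd(5) order; the literal 'x' means
    # the password is not stored in this entry (authentication is PAM's job).
    row = conn.execute(
        "SELECT username, 'x', uid, gid, gecos, homedir, shell "
        "FROM ftp_accounts WHERE username = ? LIMIT 1",
        (username,),
    ).fetchone()
    if row is None:
        return None
    return ":".join(str(col) for col in row)
```

Calling `passwd_entry(make_demo_db(), "example")` yields a line shaped exactly like one in `/etc/passwd`, which is the shape the NSS module has to hand back to the system.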

Part of setting up the new site is importing users and their content profile nodes from a different Drupal site that was set up a year or two ago to manage an event.


I'm in the process of setting up


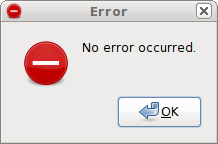

Since I'm a fan of the reliability and automated recovery that InnoDB provides, I use it for all the Drupals that I host. However, on a very busy site, this may lead to deadlocks. These in turn lead to users seeing errors, which is something I'd like to avoid. Especially if the error could be prevented.
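One common way to keep an InnoDB deadlock from surfacing as a user-facing error is to retry the whole transaction: InnoDB rolls back the victim transaction (MySQL error 1213, "Deadlock found when trying to get lock; try restarting transaction"), so reissuing it usually succeeds. The sketch below is a generic illustration of that idea, not necessarily what was done here. `DeadlockError` is a hypothetical stand-in for whatever exception your database driver raises for error 1213, and the attempt count and backoff values are arbitrary assumptions.

```python
import random
import time
from typing import Callable, TypeVar

T = TypeVar("T")


class DeadlockError(Exception):
    """Hypothetical stand-in for the driver exception raised on MySQL error 1213."""


def run_with_retry(txn: Callable[[], T], attempts: int = 3, base_delay: float = 0.05) -> T:
    """Run txn (one complete transaction), retrying it if it is chosen as a deadlock victim."""
    for attempt in range(1, attempts + 1):
        try:
            return txn()
        except DeadlockError:
            if attempt == attempts:
                raise  # Out of retries: let the caller see the error.
            # Back off a little, with jitter, so the competing transactions
            # are less likely to collide again immediately.
            time.sleep(base_delay * attempt + random.uniform(0, base_delay))
    raise AssertionError("unreachable")
```

The callable must wrap the entire transaction (begin, all statements, commit), because InnoDB has already rolled back everything the victim did; retrying only the last statement would not be safe.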